SQL Transactions and their impact on performance

Table of Contents

Mydex CIC explains how they made a 94.5% improvement in the speed of large batch SQL transactions with just a few lines of code.

Rather than rely on a giant monolithic database for supporting the needs of our members and subscribers, Mydex CIC Personal Data Stores (PDS) are discrete, isolated stores per member. This carries many advantages in terms of contention management, resilience, and risk reduction on a platform that is serving many requests to or from PDS of many different members, 24/7 by many different subscribers they approved as a connection.

Among many other open source technologies, Mydex CIC makes use of SQLite (or rather, SQLCipher, an enhanced version of it with encryption capabilities) as a core tenet of the PDS.

As the Mydex Safe, Secure Cloud platform has evolved over the years, we have had to support a variety of traffic models, payload sizes and dataset structures for the needs of the members, and therefore for the subscribers delivering data into the member’s PDS on their behalf.

Mydex CIC has recently been at the core of a project with Blackwood Homes and Care, the University of Edinburgh and others called Peoplehood. You can read more about it here and also how it even had a direct outcome in rapid response to a health incident for an elderly person participating in the project. This project sees a large size of batch data payloads delivered into participants’ PDS at regular, high rates.

This article explores our experience with batch inserts, the implications for large volumes of insertion of transactional data in applications that use SQL, and its impact on performance. We explain how we made a 94.5% performance improvement with just 3 lines of code.

#

How SQLite works with inserts

SQLite is different from other database systems, in that the database is a file on a filesystem. In its native form, there is no running daemon managing connection pools.

This has implications for locking. In other database designs like MySQL or PostgreSQL, the daemon may manage connections and handle queuing, locking and the like. (Third party, add-on features exist for SQLite that try to tackle this problem, such as Litestream, but they usually only work with native SQLite and not enhanced encryption variants like SQLCipher)

In SQLite, being a file, the locking mechanisms of the underlying filesystem are relied upon.

We won’t go into a deep dive of SQLite internals when it comes to locking, and would recommend you read the official documentation.

Suffice it to say that SQLite needs to obtain a lock on the file in order to perform an insert. To avoid corruption issues, all sorts of other very arcane subtle matters are also dealt with by the OS, such as flushing filesystem caches/buffers, etc. Note that we are not using write-ahead logging (WAL). It all adds up.

#

Our batch inserts

To aid our subscribers, we introduced the ability to send batch data in payloads to the Mydex CIC PDX API for inserting many rows at once (with certain limits in place).

At the backend, our API essentially looped over each individual item in the batch payload and performed an SQL ‘INSERT’.

The pseudocode, with uninteresting bits omitted, essentially looks like this:

1try {

2 // (unshown: open database and decrypt it)

3 foreach ($payload as $row) {

4 $this->dbo->exec("INSERT INTO [....]");

5 }

6 // (unshown: close the database)

7} catch Exception {

8 // (unshown: throw error)

9}

#

Each insert is its own transaction

In SQLite, pretty much every activity on the database implicitly starts its own transaction. This is important for ACID compliance: it ensures that if something goes wrong with inserting the row, it can be automatically rolled back.

The problem is that with every insert, that’s a full round trip of opening the file, obtaining a lock, inserting the data, flushing caches, releasing the lock, etc.

When you are inserting many rows in one HTTP request, this adds up!

To make matters worse, in our particular case, each insert, update or deletion of a row of data into the member’s PDS, also writes a ’log’ entry into a separate internal table of the member’s PDS. Mydex CIC doesn’t use this table (nor can our subscribers access it), but it serves as a kind of append-only audit trail for the member themself to inspect activity made against their PDS.

Thus, with every data insert (within a batch of inserts), we are writing not just the data, but metadata about the data. Two inserts, two transactions, per row in the batch payload. It adds up even more!

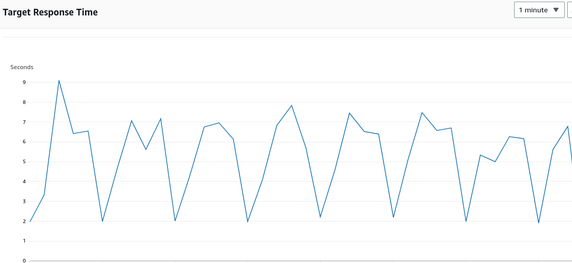

Performing some simulation tests in an internal development environment, we measured the time between batch requests:

As can be seen, with this particular type of batch, on an already busy platform, requests were taking something like 7 seconds to complete. This was simply unacceptable.

#

Contention issues with this model

Aside from the poor experience of such slow requests, with such a rapid-fire rate of transactions, contention issues also start to emerge when other subscribers (or the member themselves) want to write to their PDS, and to a lesser extent, read from it.

Concurrent INSERT requests may be met with a ‘database is locked’ error. SQLite has internal features that support a back-off/sleep/retry approach (known as the busyTimeout) to try and deal with this gracefully.

However, even if that eventually works, the time spent waiting for the lock to free up is passed down either directly as ‘slowness’ from a UX perspective, or simply errors that may result in loss of consecutive transmission of data (e.g gaps).

#

Wrapping all inserts into one transaction

Trying to resolve these issues led us down a deep dive into SQLite internals and lots of research to see what others do in these situations.

We learned that it is possible - and practically always a good idea - to ‘wrap’ all inserts in the one transaction.

How is that done?

In short, you first declare that you wish to begin a transaction immediately. Without this, SQLite implicitly starts a transaction at the moment that it comes across the first INSERT, but would also then commit (or rollback) - and therefore end - that transaction after that same INSERT.

To appropriately allow such a request to be informed that the database is busy (as another transaction may have been started), we use the ‘BEGIN IMMEDIATE’ statement. This allows us to code around whether to back off/retry (and log the situation) or return a ‘PDS is busy’ response to the API client, allowing our subscribers to decide what to do.

The pseudocode now looks like this:

1try {

2 // (unshown: open database and decrypt it)

3 $this->dbo->exec("BEGIN IMMEDIATE TRANSACTION");

4 foreach ($payload as $row) {

5 $this->dbo->exec("INSERT INTO [....]");

6 }

7 $this->dbo->exec("COMMIT");

8} catch Exception {

9 $this->dbo->exec("ROLLBACK");

10 // (unshown: gracefully retry the above up to N times.

11 // finally throw error if still failing)

12}

This helps avoid deadlocks, and you can see that other platform frameworks have evolved to make the same decision when using SQLite.

#

Performance benefits

We were initially skeptical of how much benefit the mere adding of a ‘BEGIN IMMEDIATE TRANSACTION’ followed by a ‘COMMIT’ would have, on either side of our ’loop’ of the batch payload.

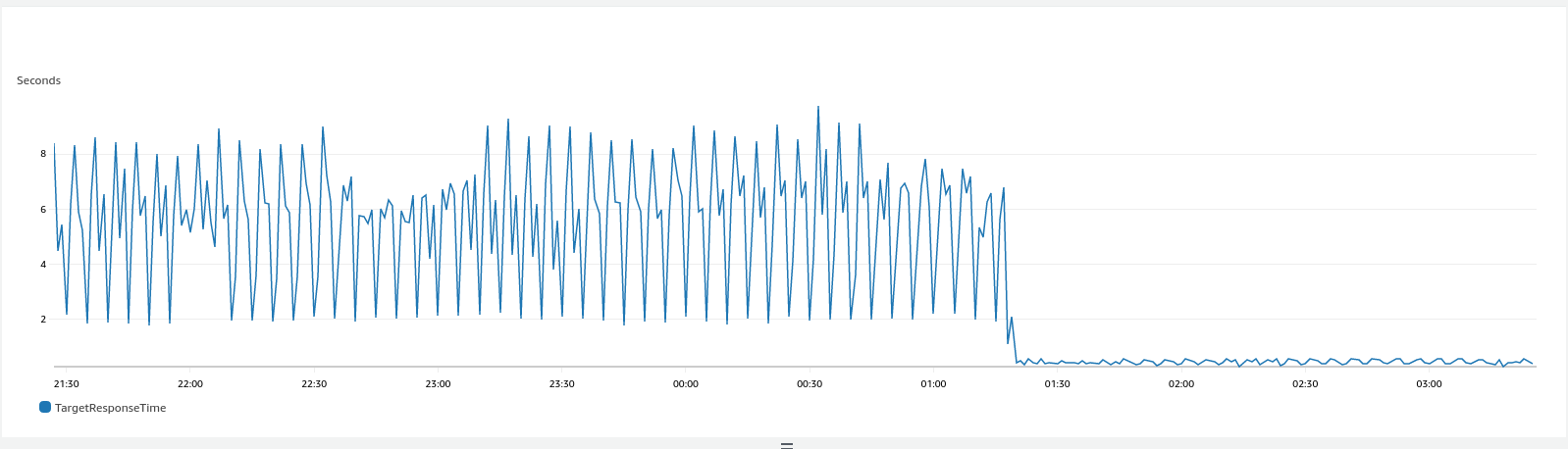

We were quickly convinced! Can you see in the below graph (of our HTTP response times) the moment where we deployed our fix?

Crunching the numbers, where $Speedup = ($PreviousTime - $CurrentTime) / $PreviousTime , we can compute this as the following:

$PreviousTime = 7 to 9 seconds (average = (7 + 9) / 2 = 8 seconds)

$CurrentTime = 0.38 to 0.5 seconds (average = (0.38 + 0.5) / 2 = 0.44 seconds)

$Speedup = (8 seconds - 0.44 seconds) / 8 seconds

$Speedup = 7.56 seconds / 8 seconds

$Speedup ≈ 0.945

So, this means it has improved by about 94.5%.

#

Consistency benefits

One area we glossed over above is the importance of the COMMIT and the ROLLBACK.

Nothing is actually written to the SQLite database until the COMMIT statement occurs - and therein lies the performance win - one write request to the underlying file, right at the end. (The magic is that if you need to, you can nonetheless still glean information from your INSERTs, such as the ’last insert ID’ even though the insert hasn’t actually been inserted!).

If something goes wrong with any of the INSERT statements (perhaps one of the rows in the batch is malformed or some other issue occurs that prevents SQLite inserting the data), the ROLLBACK will ensure all of the requests are abandoned - none of them succeed in writing to the database.

Returning to our ‘activity log’ / audit trail system mentioned earlier, this is really important. We don’t want a situation where the actual data insert fails, but the activity log still succeeds at writing a statement that the former did complete. Nor the reverse: that the data was written but then some sort of short-circuit meant the activity log did not capture that event in its audit trail.

In any situation where you are grouping together modifications that in some way relate to one another, you’ll want to use the techniques described, to ensure that problems are just as consistently reverted as they would, in normal circumstances, be written.

#

Conclusion

If you are designing for data-intensive applications, you’ll want to wrap your database modification statements in a transaction.

To avoid later eventual deadlocks that arise when a ‘read-only’ lock upgrades to a ‘write’ exclusive lock, you should use BEGIN IMMEDIATE (not just BEGIN). You may still get ‘SQLITE_BUSY’ errors, but the difference is that you’ll get them immediately and can therefore code around it (e.g implement retries or return information that the client should itself wait and retry later).

Having said that, there is a sheer performance win from wrapping many inserts in a single transaction. This dramatically reduces ‘database is locked’ problems in the first place, because the requests complete so fast that the probability of colliding with another active write request is now very small.

Don’t forget to code in ROLLBACK to appropriately revert any pending changes should an exception occur.

#

Similar articles

As is so often the case, we are certainly not the first to learn of the benefits of wrapping all SQL inserts in a single transaction. But we won’t be the last, either!

We’d like to thank the authors of the following articles for helping us get to where we needed to be.

Official SQLite 3 documentation

Blog posts

- Understanding SQLITE_BUSY (wonderful diagrams here)

- Squeezing performance from SQLite transactions

- The SQLite lock timeout nightmare

Forums