Implementing Static Analysis into our Continuous Integration workflow


Mydex CIC describes its technique for running static analysis with tools such as SonarQube and Trivy via a Jenkins pipeline. The results are sent back into an open GitHub pull request as part of the peer review lifecycle.

In today’s software development landscape, ensuring high code quality and security is crucial for delivering reliable and maintainable applications. Static code analysis, a technique that examines code without needing to run it, plays a pivotal role in identifying a variety of problems early in the development lifecycle. This article explores how Mydex CIC integrated several open source tools such as Trivy and SonarQube into our Continuous Integration workflow, between our Git repositories and our Jenkins CI service.

# Why Use Static Code Analysis?

Static code analysis offers several benefits that contribute to overall software quality:

- Early Issue Detection: By analyzing code statically, issues such as bugs, security vulnerabilities, and coding standards violations can be detected before they are released.

- Consistent Code Quality: Static analysis tools enforce coding standards and best practices uniformly across the codebase. This promotes cleaner, more maintainable code and reduces technical debt over time. It also helps onboard new developers who need to learn the codebase quickly.

- Enhanced Security: Identifying security vulnerabilities early in the development process helps mitigate risks and supports compliance with security standards and regulations.

- Improved Developer Productivity: By automating the analysis process, developers receive immediate feedback on their code changes. This accelerates the development cycle and improves productivity by focusing efforts on writing code rather than debugging. It also leads to better developer habits, often resulting in better quality of future code.

# How we used to do Static Analysis

We use Jenkins to drive our CI and CD pipelines. Previously, we invoked Trivy and SonarQube scans of the codebase during or after the deployment of our apps. By that point the apps had already passed through a PR phase and been merged into our main branches.

The problem with this approach is that the static analysis happened too late in the process. Developers could of course see, and be notified of, problems found in the analysis. However, it was frustrating to have to follow up with another deployment to fix issues found after the PR had been merged.

It could even hurt morale. After the satisfaction of pushing out a release, developers would have robots throwing warnings and alerts in their face. These might concern out-of-date dependencies, or a bug that had somehow slipped past visual review and other tests.

Rather than forever chasing our tails tidying up after ourselves, it is far better to flag such issues before the PR has a chance to be merged.

# Our Static Analysis Stack and Developer Benefits

At Mydex CIC, we have made a few decisions that affect where and how we detect changes in a git repository, and what we do with those changes. Most significantly, our CI stack runs on an internal-only network that isn’t accessible from the outside world. This means we don’t use GitHub-hosted Actions runners. It also means GitHub can’t POST webhook payloads to our Jenkins service, because Jenkins is not reachable from the public internet.

The other decision we have made is not to integrate with other GitHub components such as Dependabot. At least, not without further discussion and review of the implications for who could access the data (granted, we allow GitHub access to our repositories, and GitHub has owned Dependabot since 2019, but still).

As a result, our CI workflows look a little different to many others seen in blog posts such as this.

The main difference is that the flow is reversed. Jenkins polls the GitHub repositories with outbound requests. When it detects new commits on a branch that has an open pull request (and that PR is not in ‘draft’ status), it pulls that code and runs a battery of tests. It then posts the results of those tests as a comment on the pull request. GitHub’s API treats every pull request as an issue, so these comments go through the issue comment endpoints.
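The decision Jenkins makes on each poll can be sketched in a few lines of Python. This is a minimal illustration rather than our Jenkins configuration. It assumes the open pull requests are fetched from GitHub’s REST endpoint `GET /repos/{owner}/{repo}/pulls`, whose items carry `number`, `draft` and `head.sha`. The `last_seen` mapping of previously tested commits is hypothetical, as are the function names.

```python
import json
import urllib.request


def fetch_open_prs(owner: str, repo: str, token: str) -> list[dict]:
    """Fetch up to 100 open PRs with an outbound HTTPS request (no inbound webhook needed)."""
    req = urllib.request.Request(
        f"https://api.github.com/repos/{owner}/{repo}/pulls?state=open&per_page=100",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "X-GitHub-Api-Version": "2022-11-28",
        },
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def prs_to_build(open_prs: list[dict], last_seen: dict[int, str]) -> list[int]:
    """Return the numbers of non-draft PRs whose head commit has not been tested yet.

    `last_seen` (hypothetical) maps a PR number to the head SHA we last ran checks on.
    """
    to_build = []
    for pr in open_prs:
        if pr.get("draft"):
            continue  # draft PRs wait until they are marked ready for review
        if last_seen.get(pr["number"]) != pr["head"]["sha"]:
            to_build.append(pr["number"])
    return to_build
```

Because every request is outbound, this approach works from a network that GitHub cannot reach. The trade-off is that feedback can lag a push by up to one polling interval.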

The Static Analysis Stack combines Trivy and SonarQube scans, each wrapped in its own custom Python script, which we deploy to our Jenkins server with Ansible.
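To give a feel for the Trivy side, here is a minimal sketch of what a wrapper of this kind might look like; it is not our actual script. It assumes the `trivy` binary is on the `PATH`. It relies on Trivy’s JSON report, in which each entry under `Results` may carry a `Vulnerabilities` list whose items have a `Severity` field. The function names are hypothetical.

```python
import json
import subprocess
from collections import Counter


def run_trivy(path: str = ".") -> dict:
    """Scan a checked-out repository with Trivy and return the parsed JSON report."""
    out = subprocess.run(
        ["trivy", "fs", "--format", "json", "--quiet", path],
        check=True,
        capture_output=True,
        text=True,
    ).stdout
    return json.loads(out)


def count_by_severity(report: dict) -> Counter:
    """Count vulnerabilities per severity across every scanned target."""
    counts = Counter()
    for result in report.get("Results") or []:
        for vuln in result.get("Vulnerabilities") or []:
            counts[vuln.get("Severity", "UNKNOWN")] += 1
    return counts


def summary_line(counts: Counter) -> str:
    """Render non-zero counts in a fixed severity order, most severe first."""
    order = ["CRITICAL", "HIGH", "MEDIUM", "LOW", "UNKNOWN"]
    parts = [f"{sev}: {counts[sev]}" for sev in order if counts[sev]]
    return ", ".join(parts) or "no vulnerabilities found"
```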

For the purposes of this article, we will focus on the SonarQube custom script, as it is more interesting.

## Script Overview

The following Python script uses the SonarQube and GitHub APIs to report static code analysis results at the pull request (PR) level. It reads the latest SonarQube metrics for the project and posts them as a comment on the PR:


```python
#!/usr/bin/python3
# Fetch SonarQube metrics for a project and report them on a GitHub pull request.
# The double-curly-brace placeholders are Jinja2 variables, filled in when
# Ansible templates this script onto the Jenkins server.

import argparse
import re

import requests

token = "{{ your_sonar_export_token }}"
host = "https://{{ your_sonar_url }}"

parser = argparse.ArgumentParser()
parser.add_argument(
    "-s", "--sonar", type=str, dest="sonar", required=True,
    help="The sonar key of the Sonar project",
)
parser.add_argument(
    "-p",
    "--pull-request",
    type=str,
    dest="pr_number",
    required=True,
    help="The pull request number",
)
parser.add_argument(
    "-r",
    "--repo",
    type=str,
    dest="repo",
    required=True,
    help="The git repo name",
)
args = parser.parse_args()

# GitHub API
headers = {
    "Accept": "application/vnd.github+json",
    "Authorization": "Bearer {{ your_github_personal_access_token }}",
    "X-GitHub-Api-Version": "2022-11-28",
}

api_url = f"https://api.github.com/repos/{{ your_github_organisation }}/{args.repo}"
# PR comments live on the issues API: every pull request is also an issue
comments_url = f"{api_url}/issues/{args.pr_number}/comments"

# Metrics we care about
metric_keys_list = [
    "bugs",
    "code_smells",
    "vulnerabilities",
]
metric_keys = ",".join(metric_keys_list)
# Matches "name: 123" pairs for the metrics above, as written in our own comments
metric_pattern = re.compile(r"\b(" + "|".join(metric_keys_list) + r"): (\d+)")

# Fetch our project from the Sonar API. SonarQube takes the token as the
# basic-auth username with an empty password.
session = requests.Session()
session.auth = (token, "")

projects = session.get(f"{host}/api/projects/search", params={"projects": args.sonar})
projects.raise_for_status()

# Sonar link to the project
sonar_link = f"{host}/dashboard?id={args.sonar}"
# Map each metric name to its value as an int, so later comparisons are numeric
metrics = {}
for project in projects.json()["components"]:
    res = session.get(
        f"{host}/api/measures/component",
        params={"component": project["key"], "metricKeys": metric_keys},
    )
    res.raise_for_status()
    for measure in res.json()["component"]["measures"]:
        if measure["metric"] in metric_keys_list:
            metrics[measure["metric"]] = int(measure["value"])

# Convert the metrics into a single comma-separated string, sorted by name
metrics_joined = ", ".join(f"{name}: {value}" for name, value in sorted(metrics.items()))
print(f">>> Current Sonar metrics: {metrics_joined}")
# Use that string as the 'body' of the comment we post to GitHub
comment = {"body": f"Sonar just analysed this branch, and can report the following metrics: {metrics_joined}"}


def parse_metrics(body):
    """Return {metric: int} for every metric found in a comment body."""
    return {name: int(value) for name, value in metric_pattern.findall(body)}


def get_all_comments(url):
    """
    Fetch every comment on the PR, following the 'next' URL in the
    Link header until there are no more pages.
    """
    comments = []
    params = {"per_page": 100}
    while url:
        r = requests.get(url, headers=headers, params=params)
        r.raise_for_status()
        comments.extend(r.json())
        url = r.links.get("next", {}).get("url")
        params = None  # the 'next' URL already carries the query string
    return comments


# Keep only our Sonar comments that actually contain metrics (a human comment
# that merely mentions Sonar would otherwise break the comparison below)
sonar_comments = [
    c for c in get_all_comments(comments_url)
    if "Sonar" in c["body"] and parse_metrics(c["body"])
]

# Switch to post the first comment to the GitHub PR
add_stats = False
# Switch to detect changes
changed = False
# Var to gather changes
changes = ""

if not sonar_comments:
    print("No Sonar comments in PR, sending")
    add_stats = True
else:
    # Comments come back oldest first, so the last one is the most recent
    last_sonar_comment = sonar_comments[-1]
    print(f">>> Last Sonar comment: {last_sonar_comment['body']}")
    last_metrics = parse_metrics(last_sonar_comment["body"])

    # Compare old metrics with the new ones (as ints, not strings) and build the new comment
    labels = {
        "vulnerabilities": ("Vulnerabilities", ":warning:"),
        "bugs": ("Bugs", ":bug:"),
        "code_smells": ("Code smells", ":nose:"),
    }
    for name, (label, emoji) in labels.items():
        old, new = last_metrics.get(name), metrics.get(name)
        if old is None or new is None:
            continue
        if new < old:
            changed = True
            changes += f" * {label} have reduced :+1: from {old} to {new}\n"
        elif new > old:
            changed = True
            changes += f" * {label} have increased {emoji} from {old} to {new}\n"
    if not changed:
        print("Sonar stats have not changed, not sending again")

if add_stats:
    response = requests.post(comments_url, headers=headers, json=comment)
    response.raise_for_status()

if changed:
    new_comment = {"body": f"""
_Your friendly neighborhood Sonar thinks something has changed!_
{changes}
**{metrics_joined}**

See more details at {sonar_link}
"""}
    response = requests.post(comments_url, headers=headers, json=new_comment)
    response.raise_for_status()
    print(f">>> New Sonar comment: {new_comment['body']}")
```

## Workflow Explanation

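- Pull Request Initiation: A developer opens a pull request (PR), or pushes new commits to an open PR that is not in draft status. Jenkins detects the change on its next poll and runs the script. The script can also be run manually.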

- SonarQube Analysis: The script retrieves metrics such as bugs, code smells, and vulnerabilities from the specified SonarQube project associated with the PR.

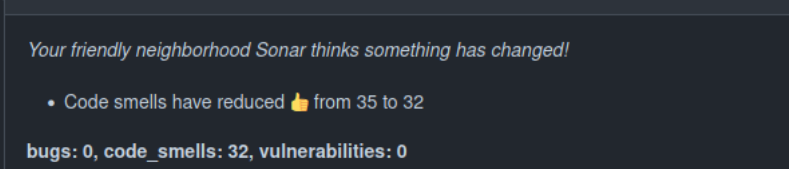

- Comparison and Commenting: What is really neat about our script is that it compares the latest metrics with the values in the most recent earlier Sonar comment from Jenkins on the PR, if there is one. If any metric changes (e.g. more bugs or fewer vulnerabilities), the script posts a new comment on the PR. This comment describes the change in context (e.g. bugs increased, code smells decreased).

- Feedback and Action: Developers receive feedback on code quality metrics directly within the PR interface. They can promptly address the issues identified, helping to ensure that only high-quality, secure code is merged into the main branch.
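In effect, the script uses its own earlier PR comment as the stored state between runs. One way to make that round trip more robust than searching for the word "Sonar" is to embed a hidden marker in each bot comment, since GitHub does not display HTML comments in rendered Markdown. The sketch below illustrates that idea; it is not part of our current script. The marker text and the function names are hypothetical.

```python
import re
from typing import Optional

MARKER = "<!-- sonar-metrics-bot -->"  # hypothetical marker, hidden when GitHub renders the comment
METRICS = ("bugs", "code_smells", "vulnerabilities")
PATTERN = re.compile(r"\b(" + "|".join(METRICS) + r"): (\d+)")


def render(metrics: dict[str, int]) -> str:
    """Build a comment body carrying the marker plus 'name: value' pairs."""
    pairs = ", ".join(f"{name}: {metrics[name]}" for name in METRICS if name in metrics)
    return f"{MARKER}\nSonar just analysed this branch: {pairs}"


def parse(body: str) -> Optional[dict[str, int]]:
    """Recover metrics from one of our comments, or None if the comment isn't ours."""
    if MARKER not in body:
        return None
    return {name: int(value) for name, value in PATTERN.findall(body)}


def latest_metrics(bodies: list[str]) -> Optional[dict[str, int]]:
    """Metrics from the most recent marked comment; `bodies` are ordered oldest first."""
    for body in reversed(bodies):
        parsed = parse(body)
        if parsed:
            return parsed
    return None


def describe_changes(old: dict[str, int], new: dict[str, int]) -> list[str]:
    """One line per metric that moved, comparing integers rather than strings."""
    lines = []
    for name in METRICS:
        if name in old and name in new and old[name] != new[name]:
            direction = "reduced" if new[name] < old[name] else "increased"
            lines.append(f"{name} {direction} from {old[name]} to {new[name]}")
    return lines
```

Comparing integers matters here. Compared as strings, `"10"` sorts before `"9"`, so a rise from 9 to 10 bugs would be reported as a reduction.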

Example of a Sonar comment in GitHub:

## Developer Benefits

- Immediate Feedback: Developers receive feedback on their code changes within the PR itself, shortly after each push. This enables them to address issues promptly, before merging code into the main branch, and reduces the likelihood of introducing bugs or security vulnerabilities into the production environment.

- Enhanced Collaboration: By centralizing code analysis results within the PR workflow, the script promotes collaboration among team members. Developers can discuss and resolve issues directly within the context of the PR, streamlining communication and decision-making processes.

- Continuous Improvement: Regular use of static analysis encourages developers to adhere to coding standards and best practices consistently. Over time, this fosters a culture of continuous improvement and learning within the development team, along with higher productivity and a right-first-time mindset.

- Risk Mitigation: Early detection and remediation of security vulnerabilities mitigate risks associated with data breaches and compliance violations. This is particularly critical for organizations operating in regulated industries or handling sensitive data. Mydex CIC is proud to have been independently certified under ISO 27001 Information Security Management for the last 11 years, covering every aspect of our lifecycle as a company.

# What’s next for this key stream of work

We are proud of what we have achieved but we want to do more in this area as part of our continual improvement agenda. SonarQube comes with some excellent rule sets for static analysis for most mainstream programming languages.

We are now looking at how and where the analysis is reporting false positives, so that we can reduce these false warnings. This is detailed work. We have to be certain that each one really is a false positive, and adjust the rules carefully so that we do not let a genuine vulnerability through.
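One starting point for that review is to tally, per rule, the issues already resolved as false positives in SonarQube. This surfaces the noisiest rules first. The sketch makes several assumptions:

- It uses the SonarQube Web API `api/issues/search` endpoint with the `componentKeys`, `resolutions` and `ps` parameters. Parameter names vary between SonarQube versions, so check your server’s built-in Web API documentation.
- It reads only the first page of results.
- The function names are hypothetical.

```python
import base64
import json
import urllib.parse
import urllib.request
from collections import Counter


def fetch_false_positives(host: str, token: str, project_key: str) -> dict:
    """Fetch one page (up to 500) of issues resolved as false positives (assumed parameters)."""
    query = urllib.parse.urlencode(
        {"componentKeys": project_key, "resolutions": "FALSE-POSITIVE", "ps": 500}
    )
    req = urllib.request.Request(f"{host}/api/issues/search?{query}")
    # SonarQube accepts a user token as the basic-auth username with an empty password
    creds = base64.b64encode(f"{token}:".encode()).decode()
    req.add_header("Authorization", f"Basic {creds}")
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def noisiest_rules(search_response: dict, top: int = 10) -> list[tuple[str, int]]:
    """Rank rules by how many of the returned issues they raised."""
    counts = Counter(issue["rule"] for issue in search_response.get("issues", []))
    return counts.most_common(top)
```

Marking an issue as a false positive silences only that one issue. A rule that keeps appearing at the top of this list is the candidate for careful tuning in the quality profile.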

# Conclusion

Implementing a static analysis stack enhances code quality, improves security, and accelerates the development process by identifying and addressing issues early in the lifecycle.

We are seeing developers pay more attention to code smells and bugs during development, before they open a PR for more formal review. This is especially helping to stop bugs from being deployed to our higher environments.